A/B Testing

A/B testing compares two versions of an email campaign or of an aspect of a scenario to evaluate which performs better. Because different variants are shown to your customers, you can determine which version is more effective, backed by data.

FirstHive offers A/B testing in scenarios (WhatsApp campaigns). A customer is assigned a variant, which is shown to them immediately when they reach an A/B split node in a scenario or match the conditions for displaying a WhatsApp campaign.

Process for WhatsApp

To understand the process, let’s take an example:

For a marketing campaign, you have created two banners to promote a certain product:

- Banner A

- Banner B

To decide which banner works better for the WhatsApp campaign, you need to perform A/B testing.

Take a certain number of users (let’s say 1000) and create two campaigns:

- Create Campaign A → Add users to Campaign A

- Create Campaign B → Attach Campaign B to Campaign A

- Number of Users = 1000: Take 1000 users for the campaign. These are the Complete Users.

- Test Users: Take 100 users as Test Users.

- Calculate Test Percentage (%): Take 10% of the total number of users for testing. These will be the Test Users.

- Last Users: The remaining 900 users are the Last Users. 1000 (Complete Users) – 100 (Test Users) = 900 (Last Users).
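The arithmetic above can be sketched in a few lines of Python. This is only an illustration: the function name `split_counts` is hypothetical, and rounding down to a whole number of users is an assumption, since the source does not say how fractional results are handled.

```python
def split_counts(total_users: int, test_percentage: float) -> tuple[int, int]:
    """Return (test_users, last_users) for a given audience size."""
    if not 0 < test_percentage < 100:
        raise ValueError("test_percentage must be between 0 and 100")
    # Users who receive one of the test variants.
    # Assumption: fractional users are rounded down.
    test_users = int(total_users * test_percentage / 100)
    # Everyone else waits for the winning campaign.
    last_users = total_users - test_users
    return test_users, last_users


test_users, last_users = split_counts(1000, 10)
print(test_users, last_users)  # 100 900
```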

Test Users

Here, the Test User Count = 100. Out of those 100 Test Users, add 50 users to Banner A and another 50 to Banner B.

- Banner A = 50 Users

- Banner B = 50 Users
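The even split between the two banners could look like the sketch below. The source does not describe how FirstHive picks which user goes into which group, so the random shuffle, the `assign_variants` name, and the handling of an odd user count are all assumptions made for illustration.

```python
import random


def assign_variants(test_user_ids, seed=None):
    """Shuffle the test users and split them into two equal groups."""
    ids = list(test_user_ids)
    # Assumption: assignment is random; a seed makes the split repeatable.
    random.Random(seed).shuffle(ids)
    half = len(ids) // 2
    # Assumption: with an odd count, the one leftover user joins neither group.
    return ids[:half], ids[half:2 * half]


banner_a, banner_b = assign_variants(range(1, 101), seed=42)
print(len(banner_a), len(banner_b))  # 50 50
```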

In a WhatsApp campaign, a user (customer) can take two actions on receiving a WhatsApp communication:

| Campaign Type | Read | Click Link |
| --- | --- | --- |
| WhatsApp | Yes | Yes |

FirstHive calculates the Test Percentage for WhatsApp from the Click count or the Read count. To run the test:

- Take 50 users.

- Put an equal number of users in each campaign (A and B), that is, 25 each.

- Set the time and run the test campaign.

- After completion of the set time, calculate the percentage.

- Select the campaign that has performed better in comparison to the other one.

For example, suppose 10 users clicked/read the link in Campaign A, while 25 users in Campaign B did so. On a percentage (%) basis, Campaign B has performed better, so FirstHive puts the remaining users (Last Users) in Campaign B and sends the final campaign.
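As a worked version of this example, the percentages can be computed as shown below, assuming 25 recipients per campaign as in the test steps. The `engagement_rate` helper is a hypothetical name, and treating a click or a read as one combined "engaged" count is a simplification for illustration.

```python
def engagement_rate(engaged: int, recipients: int) -> float:
    """Percentage of recipients who read the message or clicked the link."""
    if recipients <= 0:
        raise ValueError("recipients must be positive")
    return 100 * engaged / recipients


rate_a = engagement_rate(10, 25)  # Campaign A: 10 of 25 users engaged
rate_b = engagement_rate(25, 25)  # Campaign B: 25 of 25 users engaged
print(rate_a, rate_b)  # 40.0 100.0
```

With these numbers, Campaign B has the higher percentage, which matches the outcome described above.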

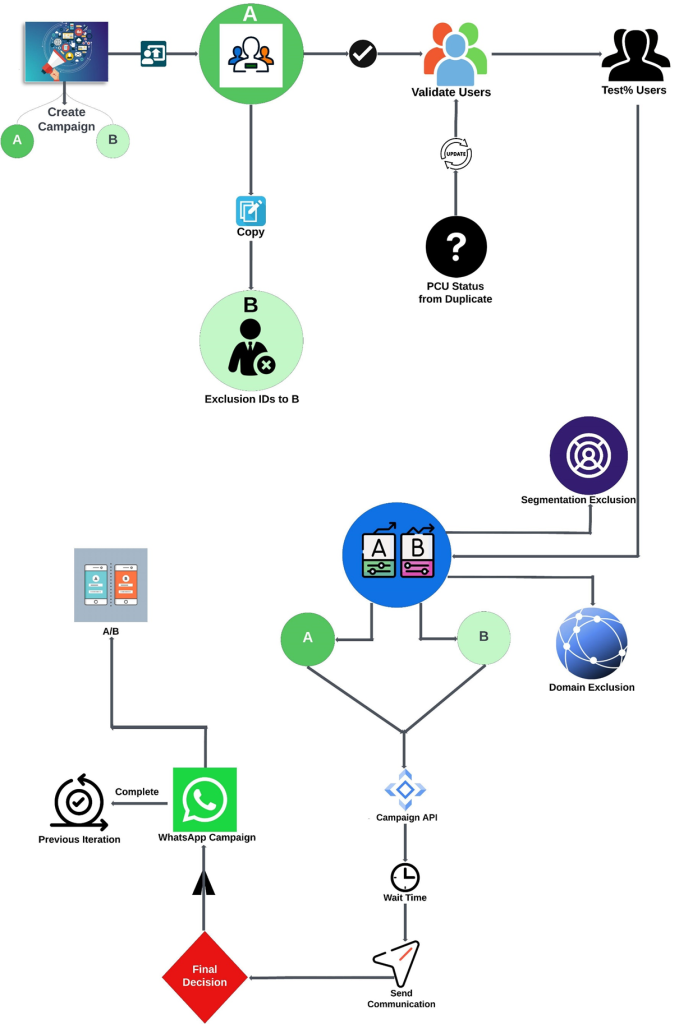

Flow

The process involves:

Step 1: Take the Valid Users for the campaign (for example, Valid User Count = 1000). At this stage, the list can still contain Invalid Users, Duplicate Users, and Unsubscribed Users.

Step 2: Exclude the Invalid Users, Duplicate Users, and Unsubscribed Users from those 1000 users.

For example,

- Valid User count = 1000.

- Out of these 1000 users, 100 are Invalid, Duplicate, or Unsubscribed users.

- Exclude those users.

- The remaining User Count is 900. 1000 (Valid Users) – 100 (Excluded Users) = 900 (Campaign Users).
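The exclusion in Step 2 amounts to a filter over the user list. The sketch below is a minimal illustration, not FirstHive's implementation: the `User` fields and the choice of the phone number as the duplicate key are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    # Hypothetical fields, chosen only for this example.
    user_id: str
    phone: str
    is_valid: bool = True
    is_unsubscribed: bool = False


def campaign_users(users):
    """Drop invalid, unsubscribed, and duplicate users, keeping first occurrences."""
    seen_phones = set()
    kept = []
    for user in users:
        if not user.is_valid or user.is_unsubscribed:
            continue
        # Assumption: two users with the same phone number are duplicates.
        if user.phone in seen_phones:
            continue
        seen_phones.add(user.phone)
        kept.append(user)
    return kept


users = [
    User("u1", "+10000000001"),
    User("u2", "+10000000002", is_valid=False),
    User("u3", "+10000000003", is_unsubscribed=True),
    User("u4", "+10000000001"),  # same phone as u1
    User("u5", "+10000000005"),
]
print([u.user_id for u in campaign_users(users)])  # ['u1', 'u5']
```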

Step 3: Apply Test Percentage (%) on the Campaign Users.

For example, with Test Percentage = 10%, 10% of 900 = 90 (Valid Users, that is, the users who take part in the test). The final user count is 900 – 90 = 810 (Final Users).

Step 4: Divide the Valid Users equally between Campaign A and Campaign B.

For example, if the Valid User count is 90, add 45 users to Campaign A and the other 45 users to Campaign B.

Step 5: Set the timer. Campaign performance is calculated once the set time has elapsed.

Step 6: Decide whether Campaign A or Campaign B has performed better.

Step 7: If both campaigns (A and B) have performed equally well, the result is inconclusive and an inconclusive campaign is sent.
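Steps 6 and 7 describe a three-way decision: A wins, B wins, or the result is inconclusive. One way to express that is sketched below. The `decide` function and the string labels are hypothetical, and comparing the two rates by cross-multiplication (to avoid floating-point ties) is a design choice of this example, not something stated in the source.

```python
def decide(engaged_a: int, sent_a: int, engaged_b: int, sent_b: int) -> str:
    """Return "A", "B", or "INCONCLUSIVE" based on engagement rates."""
    if sent_a <= 0 or sent_b <= 0:
        raise ValueError("both campaigns need at least one recipient")
    # engaged_a / sent_a > engaged_b / sent_b, compared with integers only.
    score_a = engaged_a * sent_b
    score_b = engaged_b * sent_a
    if score_a > score_b:
        return "A"
    if score_b > score_a:
        return "B"
    # Equal rates: neither variant can be declared the winner.
    return "INCONCLUSIVE"


print(decide(30, 45, 20, 45))  # A
print(decide(20, 45, 20, 45))  # INCONCLUSIVE
```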

A/B Testing Flow for WhatsApp

Steps

Step 1: Create two Campaigns.

| For example, you need to create two campaigns for WhatsApp: Campaign A and Campaign B. |

Step 2: Put users in Campaign A.

- Copy the Exclusion IDs to Campaign B

Step 3: Validate Users

| Update the PCU Status for Duplicate: Duplicate = 10 |

Step 4: Get the Test % Users.

Step 5: Divide the users into two parts A and B.

| Do the Segment Exclusion. Do the Domain Exclusion. |

Step 6: Call the Campaign API.

| Call the Campaign API → Set the Wait Time |

Step 7: Set the Time and Wait.

| Set a particular Time and Wait. The wait time is 1 hour to 24 hours. |
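The 1 to 24 hour wait window can be checked before the decision time is scheduled. The sketch below only illustrates that range check with Python's standard `datetime` module. The `decision_time` function is hypothetical and does not represent the Campaign API, and treating both ends of the range as allowed is an assumption.

```python
from datetime import datetime, timedelta, timezone

MIN_WAIT = timedelta(hours=1)
MAX_WAIT = timedelta(hours=24)


def decision_time(sent_at: datetime, wait_hours: float) -> datetime:
    """Return when the test result should be calculated."""
    wait = timedelta(hours=wait_hours)
    # Assumption: 1 hour and 24 hours are both valid wait times.
    if not MIN_WAIT <= wait <= MAX_WAIT:
        raise ValueError("wait time must be between 1 and 24 hours")
    return sent_at + wait


sent_at = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
print(decision_time(sent_at, 6).isoformat())  # 2024-01-01T15:00:00+00:00
```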

Step 8: Calculate the Decision.

| Determine whether Campaign A or Campaign B has performed better. |

Step 9: Select the campaign version to be sent, either A or B.

| Complete previous iterations |

Step 10: Send Final Campaign.